Before starting: this is the first part of an experiment on how far the custom node can go. The final result is very messy and expensive, and I doubt it has any practical use or advantage over other existing techniques or engine-level integrated SDF solutions.

With that being said, this may give you new ideas on how to approach other problems, or some insight into how SDFs work. So take a seat and take a look at how monsters are made :3


Let's create shapes using the custom node! For now, let's do some raymarching with SDFs (signed distance functions). We will do this using a .ush file with a custom node. Keep in mind that this can be done directly inside the custom node, but for clarity I'll be using an external file.

I did a post on how to work with external files in the custom node; if you are not sure how to do it, give it a look. It also shows how to get most of the nodes in code (such as camera position, world position, etc.) and where to start searching for that sweet HLSL code Unreal uses under the hood.

What is a distance field?

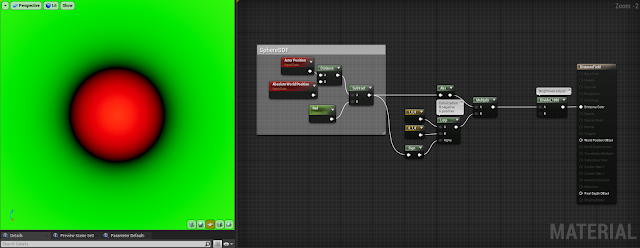

If you wonder what the hell a distance field is, it's basically a function that gives the distance from a point to a given shape. For a sphere centered at the origin, the SDF formula is distance = length(point) - radius. You can visualize it with the following material, where the negative values (inner points) are red and the positive ones are green.

The good part is that this gives you an easy way to create more complex shapes with minimal operations, since each shape boils down to a single float. The additive boolean (union) between two spheres is just a min operation:

Mixing shapes is very simple and cheap using SDFs!
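To make the sign convention and the union concrete, here is a small CPU-side C++ port of the math. It is not Unreal code: float3 is a minimal stand-in for the HLSL type, and the twoSpheres scene is a hypothetical example. It checks that points inside a sphere give negative values and that the union is a plain min:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only, not engine code).
struct float3 { float x, y, z; };
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Signed distance from p to a sphere of radius r centered at c:
// negative inside, zero on the surface, positive outside.
float sdSphere(float3 p, float3 c, float r) { return length(p - c) - r; }

// Additive boolean (union) of two shapes: keep whichever surface is closer.
float opUnion(float d1, float d2) { return std::min(d1, d2); }

// Hypothetical scene: two unit spheres centered at x = -1.5 and x = +1.5.
float twoSpheres(float3 p) {
    return opUnion(sdSphere(p, {-1.5f, 0.f, 0.f}, 1.f),
                   sdSphere(p, {1.5f, 0.f, 0.f}, 1.f));
}
```

Evaluating twoSpheres at the center of the right sphere gives -1 (inside it), and at the origin gives 0.5 (half a unit outside both).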

Setting up the raymarch

First let's make a basic sphere to set things up. There are tons of resources on raymarching, so I won't go into much detail about how it works. In this case we will use sphere tracing, a raymarching method that steps by the distance to the shape instead of testing at regular intervals (this is basically an optimization for solid shapes). So we start with a direction (the camera vector) and a point.

At each iteration we check the distance from the current point to the shape; in the next iteration we test again with the point moved along the direction by that distance (we are sure that there are no shapes within that distance).

If the distance is small enough, we assume that we hit the shape, stop iterating and return 1.

Basic sphere marching recap

```hlsl
//SphereTracing
// radius and MinDistance are custom node inputs
float SphereTrace(int steps){
    float output = 0;
    float dist = 0;
    float lastDistance = 0;
    float3 p = Parameters.AbsoluteWorldPosition;   // start on the polygon surface
    float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
    float3 direction = Parameters.CameraVector;    // points from the pixel towards the camera
    for (int i = 0; i < steps; i++)
    {
        lastDistance = dist;
        dist = sdSphere(p, actorPos, radius);
        if (dist < MinDistance)
        {
            output = 1;   // hit
            return output;
        }
        else
        {
            p -= direction * dist;   // move away from the camera by the safe distance
        }
    }
    return output;
}
```

The sphere distance function gives the distance to the sphere surface:

```hlsl
//Sphere
float sdSphere(float3 p, float3 c, float s)
{
    return distance(p, c) - s;   // negative inside, positive outside
}
```

This starts sampling at the polygon surface, using p as the current sampling point. I added the actor position as the sphere center so the sphere is visible away from the world origin.

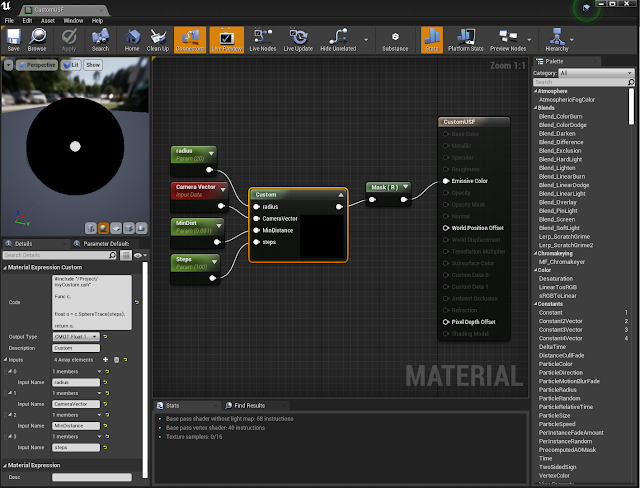

With this in place, we just need to set up a custom node that feeds in the camera vector, the number of steps, the minimum distance and the radius of the sphere.
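The sphere tracing loop itself can be checked outside the engine. This is a CPU-side C++ sketch under a few assumptions: float3 is a minimal stand-in for the HLSL type, the scene is a hypothetical unit sphere at the origin, and dir points into the scene. The HLSL version subtracts CameraVector instead, because Unreal's CameraVector points from the pixel towards the camera.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Hypothetical scene: unit sphere at the origin.
float unitSphere(float3 p) { return length(p) - 1.f; }

struct TraceResult { bool hit; int steps; float3 p; };

// Sphere tracing: advance along the ray by the SDF value, which is a safe
// step because no surface can be closer than that distance.
TraceResult sphereTrace(float3 origin, float3 dir, float (*sdf)(float3),
                        int maxSteps, float minDistance) {
    float3 p = origin;
    for (int i = 0; i < maxSteps; ++i) {
        float d = sdf(p);
        if (d < minDistance) return {true, i, p};  // close enough: count as a hit
        p = p + dir * d;                           // advance by the safe distance
    }
    return {false, maxSteps, p};  // ran out of steps
}
```

A ray aimed straight at the sphere from x = -5 lands exactly on the surface after one step, while a ray passing 3 units above the center never gets closer than 2 and uses the whole budget.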

Debugging the iterations

First, let's see how many samples we are taking, to understand the limitations of sphere tracing. To see this, we just need to replace the output with `output = (float)i / steps;`

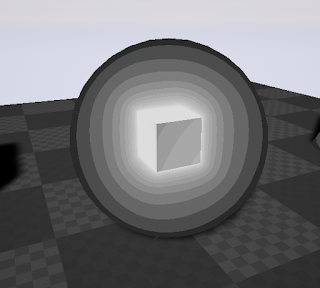

This returns pure white when all steps are performed and pure black when none are, which gives us an accurate idea of how sphere tracing works.
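The debug value `(float)i / steps` can be reproduced with a CPU-side C++ sketch. The iterationHeat helper and the unit-sphere scene are hypothetical; the point is that a ray hitting the sphere head-on is almost black, a ray grazing its edge is brighter, and a ray that misses is pure white.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Mirrors the debug output (float)i / steps:
// 0 = hit on the first test, 1 = every step used without a hit.
// Scene (assumption): unit sphere at the origin, dir normalized.
float iterationHeat(float3 origin, float3 dir, int steps, float minDistance) {
    float3 p = origin;
    for (int i = 0; i < steps; ++i) {
        float d = length(p) - 1.f;
        if (d < minDistance) return static_cast<float>(i) / steps;
        p = p + dir * d;
    }
    return 1.f;  // the whole budget was spent
}
```

Near the silhouette the ray runs almost parallel to the surface, so each step only shrinks the distance a little; that is why the borders light up in the debug view.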

Notice how the complete loop runs for every pixel outside the sphere. We can avoid this by creating a "shell" to trace in. The geometry works as a shell for the front part, but since we don't know the extent of the back geometry, we can define a basic generic shape in code. Let's use a sphere for this example; we just have to stop iterating when the point goes out of bounds.

```hlsl
//SphereTracing
float SphereTrace(int steps){
    float output = 0;
    float dist = 0;
    float lastDistance = 0;
    float3 p = Parameters.AbsoluteWorldPosition;
    float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
    // bounds radius of the primitive, used as the spherical cage
    float actorRad = GetPrimitiveData(Parameters.PrimitiveId).ObjectWorldPositionAndRadius.w;
    float3 direction = Parameters.CameraVector;
    for (int i = 0; i < steps; i++)
    {
        //BackCageCheck
        if (IsValidPoint(p, actorPos, actorRad)) {
            lastDistance = dist;
            dist = sdSphere(p, actorPos, radius);
            if (dist < MinDistance)
            {
                output = (float)i / steps;   // debug: fraction of the step budget used
                return output;
            }
            else
            {
                p -= direction * dist;
            }
        } else {
            return (float)i / steps;   // left the cage: stop early
        }
    }
    return 1;   // every step was used (i is out of scope after the loop)
}

//Cage
bool IsValidPoint(float3 p, float3 aPos, float aRad)
{
    return distance(p, aPos) < aRad;
}

//Sphere
float sdSphere(float3 p, float3 c, float s)
{
    return distance(p, c) - s;
}
```

If we used a cube or another shape as the cage, we would have to start iterating where the ray enters the shape and stop once it exits. That comes in handy, but to keep things simple let's keep using a sphere.
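The benefit of the cage can be measured with a CPU-side C++ sketch. Assumptions: a unit sphere at the origin, a spherical cage of radius 2 around it, and a ray that starts inside the cage (as it does on the polygon surface) but misses the sphere. Passing an infinite radius disables the cage for comparison; traceInCage is a hypothetical helper mirroring IsValidPoint.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// CPU port of IsValidPoint: is p still inside the bounding-sphere cage?
bool isValidPoint(float3 p, float3 cageCenter, float cageRadius) {
    return length(p - cageCenter) < cageRadius;
}

struct CageTrace { bool hit; int steps; };

// Sphere trace that bails out as soon as the point leaves the cage.
CageTrace traceInCage(float3 origin, float3 dir, int maxSteps,
                      float minDistance, float cageRadius) {
    const float3 center = {0.f, 0.f, 0.f};
    float3 p = origin;
    for (int i = 0; i < maxSteps; ++i) {
        if (!isValidPoint(p, center, cageRadius)) return {false, i};  // left the cage
        float d = length(p - center) - 1.f;  // unit sphere at the cage center
        if (d < minDistance) return {true, i};
        p = p + dir * d;
    }
    return {false, maxSteps};  // every step was used
}
```

With the cage the missing ray exits after a handful of iterations; without it, the same ray keeps marching until the budget runs out.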

Now we have an appropriate debug view outside the shape

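```hlsl
// In SphereTrace, swap the sphere for a box centered on the actor
// (radius is broadcast to a float3 half-size):
dist = sdBox(p - actorPos, radius);

//Box
float sdBox(float3 p, float3 b) {
    float3 q = abs(p) - b;
    return length(max(q, 0.0)) + min(max(q.x, max(q.y, q.z)), 0.0);
}
```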

As you can see, most of the iterations happen at the borders of the shapes (and yes, you can use this as ambient occlusion). We can reduce this by increasing MinDistance. Keep in mind the trade-off: the lower the MinDistance, the sooner we run out of iterations on surfaces nearly parallel to the view direction, and if you set it too high the shape starts showing artifacts.
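Both failure modes of MinDistance show up with a single ray in a CPU-side C++ sketch. Assumptions: a hypothetical box with half-size 1 at the origin (sdBox ported from the HLSL above) and a ray that skims 0.005 units above its top face, so it should be a miss.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// CPU port of sdBox: b holds the half-size of the box on each axis.
float sdBox(float3 p, float3 b) {
    float3 q = {std::fabs(p.x) - b.x, std::fabs(p.y) - b.y, std::fabs(p.z) - b.z};
    float3 outside = {std::max(q.x, 0.f), std::max(q.y, 0.f), std::max(q.z, 0.f)};
    return length(outside) + std::min(std::max(q.x, std::max(q.y, q.z)), 0.f);
}

// Hypothetical scene: box with half-size 1 centered at the origin.
float unitBox(float3 p) { return sdBox(p, {1.f, 1.f, 1.f}); }

struct TraceResult { bool hit; int steps; };

TraceResult sphereTrace(float3 origin, float3 dir, float (*sdf)(float3),
                        int maxSteps, float minDistance) {
    float3 p = origin;
    for (int i = 0; i < maxSteps; ++i) {
        float d = sdf(p);
        if (d < minDistance) return {true, i};
        p = p + dir * d;
    }
    return {false, maxSteps};
}
```

With MinDistance = 0.01 the skimming ray is reported as a hit (an artifact: it really passes above the box). With MinDistance = 0.001 it crawls along the top face in 0.005-unit steps and exhausts all 64 iterations.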

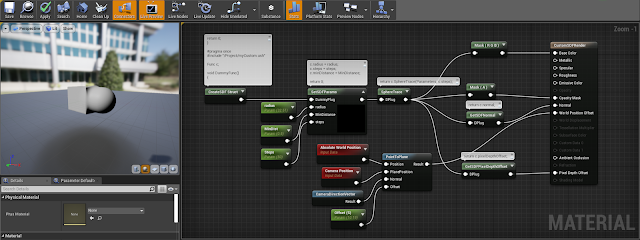

Cleaning the material

If we want, we can integrate the shader more tightly with the material editor to make it more flexible. For this, let's first split the current node into multiple nodes: one for the struct creation and another for the actual trace (take a look at the last post). The .ush file:

```hlsl
struct Func
{
    float radius;
    float minDistance;
    float steps;
    float output;

    //SphereTracing
    float SphereTrace(FMaterialPixelParameters Parameters, int steps){
        float dist = 0;
        float lastDistance = 0;
        float3 p = Parameters.AbsoluteWorldPosition;
        float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
        float actorRad = GetPrimitiveData(Parameters.PrimitiveId).ObjectWorldPositionAndRadius.w;
        float3 direction = Parameters.CameraVector;
        output = 0;
        for (int i = 0; i < steps; i++)
        {
            //BackCageCheck
            if (IsValidPoint(p, actorPos, actorRad)) {
                lastDistance = dist;
                dist = sdBox(p - actorPos, radius);
                if (dist < minDistance)
                {
                    output = (float)i / steps;
                    return output;
                }
                else
                {
                    p -= direction * dist;
                }
            } else {
                return (float)i / steps;
            }
        }
        return 1;   // every step was used
    }

    //Cage
    bool IsValidPoint(float3 p, float3 aPos, float aRad)
    {
        return distance(p, aPos) < aRad;
    }

    //Sphere
    float sdSphere(float3 p, float3 c, float s)
    {
        return distance(p, c) - s;
    }

    //Box
    float sdBox(float3 p, float3 b) {
        float3 q = abs(p) - b;
        return length(max(q, 0.0)) + min(max(q.x, max(q.y, q.z)), 0.0);
    }
};
```

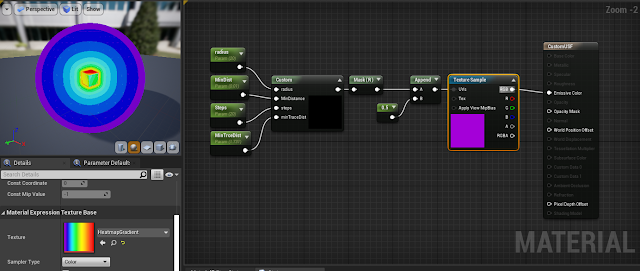

Now we do the same in separate nodes; this will allow us to add and move shapes more easily in the material editor! ^^

Now let's move the shape distance check to a separate function:

```hlsl
//BackCageCheck (inside SphereTrace)
if (IsValidPoint(p, actorPos, actorRad)) {
    lastDistance = dist;
    dist = Map(Parameters, p);
    // ...rest of the loop unchanged

// New member function holding the scene SDF
float Map(FMaterialPixelParameters Parameters, float3 p){
    float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
    return sdBox(p - actorPos, radius);
}
```

Everything should look the same. Now let's calculate the normal of the shape just by sampling nearby points and normalizing the result:

We add a float3 to our struct to store the normal values

```hlsl
float3 normal;
```

Create the normal function

```hlsl
// Central differences: the SDF gradient points along the surface normal
float3 CalcNormal(FMaterialPixelParameters param, float3 p)
{
    const float d = 0.001;
    return normalize(float3(
        Map(param, p + float3(d, 0, 0)) - Map(param, p - float3(d, 0, 0)),
        Map(param, p + float3(0, d, 0)) - Map(param, p - float3(0, d, 0)),
        Map(param, p + float3(0, 0, d)) - Map(param, p - float3(0, 0, d))));
}
```

And calculate the normal value once we hit the shape

```hlsl
if (dist < minDistance)
{
    output = (float)i / steps;
    normal = CalcNormal(Parameters, p);
    return output;
}
```
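The central-difference normal can be sanity-checked on the CPU. This C++ sketch ports CalcNormal with the same 0.001 offset; the radius-2 sphere scene and the function-pointer parameter are assumptions for illustration. On a sphere centered at the origin, the normal at a surface point must point straight away from the center.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }
float3 normalize(float3 v) { float l = length(v); return {v.x / l, v.y / l, v.z / l}; }

// Hypothetical scene: sphere of radius 2 at the origin.
float mapSphere(float3 p) { return length(p) - 2.f; }

// Central differences along each axis approximate the SDF gradient,
// which is the surface normal once normalized.
float3 calcNormal(float (*sdf)(float3), float3 p) {
    const float d = 0.001f;
    float3 g = {
        sdf({p.x + d, p.y, p.z}) - sdf({p.x - d, p.y, p.z}),
        sdf({p.x, p.y + d, p.z}) - sdf({p.x, p.y - d, p.z}),
        sdf({p.x, p.y, p.z + d}) - sdf({p.x, p.y, p.z - d})};
    return normalize(g);  // the 2*d denominator cancels out when normalizing
}
```

This costs six extra Map evaluations per hit pixel, which adds up quickly once Map contains several blended shapes.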

If we output the normal value through a custom node, we can plug it into the Normal input of the material. But you may notice that the normals are not correct: they are in world space, while the Normal input expects tangent space by default. To fix this, you can disable Tangent Space Normal in the material settings, or use the TransformVector node (World to Tangent).

Now that we have normals, let's add an output color parameter. This is as simple as making the output variable a float4, renaming it to color and setting it to 1 if we hit the shape. Remember also to change the SphereTrace function return type to float4, and to set the SphereTrace node output to float4 as well.

```hlsl
struct Func
{
    float radius;
    float minDistance;
    float steps;
    float4 color;
    float3 normal;

    //SphereTracing
    float4 SphereTrace(FMaterialPixelParameters Parameters, int steps){
        float dist = 0;
        float lastDistance = 0;
        float3 p = Parameters.AbsoluteWorldPosition;
        float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
        float actorRad = GetPrimitiveData(Parameters.PrimitiveId).ObjectWorldPositionAndRadius.w;
        float3 direction = Parameters.CameraVector;
        color = 0;
        for (int i = 0; i < steps; i++)
        {
            //BackCageCheck
            if (IsValidPoint(p, actorPos, actorRad)) {
                lastDistance = dist;
                dist = Map(Parameters, p);
                if (dist < minDistance)
                {
                    color = 1.f;
                    normal = CalcNormal(Parameters, p);
                    return color;
                }
                else
                {
                    p -= direction * dist;
                }
            } else {
                return color;
            }
        }
        return color;
    }

    float Map(FMaterialPixelParameters Parameters, float3 p){
        float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
        return sdBox(p - actorPos, radius);
    }

    float3 CalcNormal(FMaterialPixelParameters param, float3 p)
    {
        const float d = 0.001;
        return normalize(float3(
            Map(param, p + float3(d, 0, 0)) - Map(param, p - float3(d, 0, 0)),
            Map(param, p + float3(0, d, 0)) - Map(param, p - float3(0, d, 0)),
            Map(param, p + float3(0, 0, d)) - Map(param, p - float3(0, 0, d))));
    }

    //Cage
    bool IsValidPoint(float3 p, float3 aPos, float aRad)
    {
        return distance(p, aPos) < aRad;
    }

    //SDF shapes
    //Sphere
    float sdSphere(float3 p, float3 c, float s)
    {
        return distance(p, c) - s;
    }

    //Box
    float sdBox(float3 p, float3 b) {
        float3 q = abs(p) - b;
        return length(max(q, 0.0)) + min(max(q.x, max(q.y, q.z)), 0.0);
    }
};
```

Rejoice! We got a very expensive cube

No more straight lines

Before going further, let's make the boring cube a little more interesting by adding the signature distance field feature to the shader: smooth blending. Adding another shape to the Map function does the trick; in this case let's add a sphere like so:

```hlsl
float Map(FMaterialPixelParameters Parameters, float3 p){
    float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
    // Box centered 20 units to the -X side of the actor
    float shapeA = sdBox(p - actorPos + float3(20.f, 0.f, 0.f), radius);
    // actorPos cancels out here, so this sphere stays fixed at world (40, 0, 0)
    float shapeB = sdSphere(p - actorPos + float3(-20.f, 0.f, 0.f), -actorPos + float3(20.f, 0.f, 0.f), radius);
    float blending = opSmoothUnion(shapeA, shapeB, 30.f);
    return blending;
}

//SDF blending (polynomial smooth minimum)
float opSmoothUnion(float d1, float d2, float k) {
    float h = clamp(0.5 + 0.5 * (d2 - d1) / k, 0.0, 1.0);
    return lerp(d2, d1, h) - k * h * (1.0 - h);
}
```

Now our shapes connect smoothly. There are many ways to round, twist, transform and otherwise modify our SDFs.

Special mention goes to the blending coefficient (30.f in this case), which determines how smooth the intersection is. You can use a variable to see how it changes in real time; for simplicity, I will keep it fixed.
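How the coefficient k behaves can be checked with a CPU-side C++ port of opSmoothUnion. HLSL's lerp(a, b, t) is written out as a + (b - a) * t and clamp as min/max; otherwise the formula is the same as above. When the two distances differ by at least k the result is exactly the plain min; when they are equal, the surface is pulled in by k/4.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// CPU port of the polynomial smooth minimum used by opSmoothUnion.
float opSmoothUnion(float d1, float d2, float k) {
    float h = std::min(std::max(0.5f + 0.5f * (d2 - d1) / k, 0.f), 1.f);  // clamp(.., 0, 1)
    float mixed = d2 + (d1 - d2) * h;  // HLSL: lerp(d2, d1, h)
    return mixed - k * h * (1.f - h);  // pull the surface in near the seam
}
```

For example, with both distances at 10, k = 30 gives 2.5 and k = 60 gives -5: a larger k blends over a wider region and rounds the joint more.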

Notice also how the sphere remains at the map center, so you can do this <3:

Before going further, let's make them part of the world. To achieve this, there are a couple of things to consider:

Depth integration

Well, this is now possible without using transparent materials, thanks to the Pixel Depth Offset output of the material. The plan is to plug in the shape depth minus the usual polygon depth, so we "push" the depth back far enough to match our shape.

You can see the difference with a simple post process: on the left the regular SceneDepth, on the right the true distance to the scene.

So let's output the offset in the color alpha, since it will only be less than 1 when the shape is less than 1 cm from the cage surface (this way you don't need to make a separate node for the pixel depth offset). That being said, for consistency I'll make a separate node, but in 99.99% of cases there will be no difference.

In the hit branch of SphereTrace:

```hlsl
if (dist < minDistance)
{
    float depth = CalculateDepth(p);                                     // depth of the hit point
    float initDepth = CalculateDepth(Parameters.AbsoluteWorldPosition);  // depth of the cage surface
    float offsetDepth = depth - initDepth;
    color = 1;
    pixelDepthOffset = offsetDepth;
    normal = CalcNormal(Parameters, p);
    return color;
}

// Depth of p along the camera's forward axis (dt * dist equals dot(ViewForward, d))
float CalculateDepth(float3 p){
    float3 d = p - ResolvedView.WorldCameraOrigin;
    float dt = dot(ResolvedView.ViewForward, normalize(d));
    float dist = length(d);
    return (dt * dist);
}
```
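The depth math can be verified on the CPU. This C++ sketch ports CalculateDepth with the camera origin and forward vector passed in explicitly (in the shader they come from ResolvedView); the camera at the origin looking down +X and the 20-unit march are made-up numbers. It shows that the depth is a projection onto the forward axis, not the Euclidean distance, and that a hit further along the view ray yields a positive offset.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float length(float3 v) { return std::sqrt(dot(v, v)); }
float3 normalize(float3 v) { return v * (1.f / length(v)); }

// CPU port of CalculateDepth: depth of p along the camera's forward axis.
float calculateDepth(float3 p, float3 cameraOrigin, float3 viewForward) {
    float3 d = p - cameraOrigin;
    float dt = dot(viewForward, normalize(d));  // cosine between view axis and d
    float dist = length(d);
    return dt * dist;  // same value as dot(viewForward, d)
}
```

Moving 20 units along the view ray through a pixel off to the side only adds 20 * cos(angle) to the depth, which is why the offset has to be computed from depths rather than from the marched distance.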

Now we have a nice depth integration for our SDFs

Making the cage invisible

A handy material function ready to use

Integrating the actor transform

Everything is nice and fancy, but there is a huge elephant in the room: the actor transform has no effect on our shapes. Luckily for us, this can be solved.

Let's do all the operations in local space and then transform them to world space. First we need to add the transform functions:

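```hlsl
// Position: local -> world
float3 transformPLW(FMaterialPixelParameters Parameters, float3 v)
{
    return TransformLocalPositionToWorld(Parameters, v);
}

// Position: world -> local
float3 transformPWL(FMaterialPixelParameters Parameters, float3 v)
{
    return mul(float4(v, 1), GetPrimitiveData(Parameters.PrimitiveId).WorldToLocal).xyz;
}

// Vector: local -> world
float3 transformLW(FMaterialPixelParameters Parameters, float3 v)
{
    return TransformLocalVectorToWorld(Parameters, v);
}

// Vector: world -> local (3x3 part only, so translation is ignored; normalized because scale changes the length)
float3 transformWL(FMaterialPixelParameters Parameters, float3 v)
{
    return normalize(mul(v, (float3x3)GetPrimitiveData(Parameters.PrimitiveId).WorldToLocal));
}
```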

The first two functions transform positions (using the engine's position transforms), and the last two transform vectors. Now let's apply them to the current code:

```hlsl
//SphereTracing
float4 SphereTrace(FMaterialPixelParameters Parameters, int steps){
    float dist = 0;
    float lastDistance = 0;
    float3 wp = Parameters.AbsoluteWorldPosition;
    float3 p = transformPWL(Parameters, wp);   // march in local space
    float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
    float actorRad = GetPrimitiveData(Parameters.PrimitiveId).ObjectWorldPositionAndRadius.w;
    float3 direction = transformWL(Parameters, Parameters.CameraVector);
    color = 0;
    for (int i = 0; i < steps; i++)
    {
        float3 pW = transformPLW(Parameters, p);   // world-space copy for the cage and depth
        //BackCageCheck
        if (IsValidPoint(pW, actorPos, actorRad)) {
            lastDistance = dist;
            dist = Map(Parameters, p);
            if (dist < minDistance)
            {
                float depth = CalculateDepth(pW);
                float initDepth = CalculateDepth(wp);
                float offsetDepth = depth - initDepth;
                color = 1;
                color.a = 1;
                pixelDepthOffset = offsetDepth;
                normal = transformLW(Parameters, CalcNormal(Parameters, p));   // local normal back to world
                return color;
            }
            else
            {
                p -= direction * dist;
            }
        } else {
            return color;
        }
    }
    return color;
}
```
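The difference between the position and vector transforms comes down to the w component in HLSL's row-vector product mul(float4(v, w), M). This CPU-side C++ sketch uses hypothetical matrices (scale 2, then translate 100 units on X, and its inverse) in place of the engine's LocalToWorld/WorldToLocal, to show that positions pick up the translation while vectors do not.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-ins for HLSL types (illustration only).
struct float3 { float x, y, z; };
struct float4x4 { float m[4][4]; };

// HLSL-style row-vector product mul(float4(v, w), M), keeping .xyz.
// w = 1 for positions (translation applies), w = 0 for vectors (it doesn't).
float3 mulRow(float3 v, float w, const float4x4& M) {
    float in[4] = {v.x, v.y, v.z, w};
    float out[3] = {0.f, 0.f, 0.f};
    for (int c = 0; c < 3; ++c)
        for (int r = 0; r < 4; ++r) out[c] += in[r] * M.m[r][c];
    return {out[0], out[1], out[2]};
}

float3 transformPosition(float3 v, const float4x4& M) { return mulRow(v, 1.f, M); }
float3 transformVector(float3 v, const float4x4& M) { return mulRow(v, 0.f, M); }
```

Because the vector transform still applies the scale, a unit direction taken into local space comes out with a different length, which is why transformWL normalizes its result before marching.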

Right now the material and the shader file should look like this:

```hlsl
struct Func
{
    float radius;
    float minDistance;
    float steps;
    float4 color;
    float3 normal;
    float pixelDepthOffset;

    //SphereTracing
    float4 SphereTrace(FMaterialPixelParameters Parameters, int steps){
        float dist = 0;
        float lastDistance = 0;
        float3 wp = Parameters.AbsoluteWorldPosition;
        float3 p = transformPWL(Parameters, wp);
        float3 actorPos = GetPrimitiveData(Parameters.PrimitiveId).ActorWorldPosition;
        float actorRad = GetPrimitiveData(Parameters.PrimitiveId).ObjectWorldPositionAndRadius.w;
        float3 direction = transformWL(Parameters, Parameters.CameraVector);
        color = 0;
        for (int i = 0; i < steps; i++)
        {
            float3 pW = transformPLW(Parameters, p);
            //BackCageCheck
            if (IsValidPoint(pW, actorPos, actorRad)) {
                lastDistance = dist;
                dist = Map(Parameters, p);
                if (dist < minDistance)
                {
                    float depth = CalculateDepth(pW);
                    float initDepth = CalculateDepth(wp);
                    float offsetDepth = depth - initDepth;
                    color = 1;
                    color.a = 1;
                    pixelDepthOffset = offsetDepth;
                    normal = transformLW(Parameters, CalcNormal(Parameters, p));
                    return color;
                }
                else
                {
                    p -= direction * dist;
                }
            } else {
                return color;
            }
        }
        return color;
    }

    float Map(FMaterialPixelParameters Parameters, float3 p){
        float shapeA = sdBox(p, radius);
        float shapeB = sdSphere(p + float3(-20.f, 0.f, 0.f), float3(20.f, 0.f, 0.f), radius);
        float blending = opSmoothUnion(shapeA, shapeB, 30.f);
        return blending;
    }

    float3 CalcNormal(FMaterialPixelParameters param, float3 p)
    {
        const float d = 0.001;
        return normalize(float3(
            Map(param, p + float3(d, 0, 0)) - Map(param, p - float3(d, 0, 0)),
            Map(param, p + float3(0, d, 0)) - Map(param, p - float3(0, d, 0)),
            Map(param, p + float3(0, 0, d)) - Map(param, p - float3(0, 0, d))));
    }

    //Cage
    bool IsValidPoint(float3 p, float3 aPos, float aRad)
    {
        return distance(p, aPos) < aRad;
    }

    //SDF shapes
    //Sphere
    float sdSphere(float3 p, float3 c, float s)
    {
        return distance(p, c) - s;
    }

    //Box
    float sdBox(float3 p, float3 b) {
        float3 q = abs(p) - b;
        return length(max(q, 0.0)) + min(max(q.x, max(q.y, q.z)), 0.0);
    }

    float CalculateDepth(float3 p){
        float3 d = p - ResolvedView.WorldCameraOrigin;
        float dt = dot(ResolvedView.ViewForward, normalize(d));
        float dist = length(d);
        return (dt * dist);
    }

    //SDF blending
    float opSmoothUnion(float d1, float d2, float k) {
        float h = clamp(0.5 + 0.5 * (d2 - d1) / k, 0.0, 1.0);
        return lerp(d2, d1, h) - k * h * (1.0 - h);
    }

    //Transform functions
    float3 transformPLW(FMaterialPixelParameters Parameters, float3 v)
    {
        return TransformLocalPositionToWorld(Parameters, v);
    }

    float3 transformPWL(FMaterialPixelParameters Parameters, float3 v)
    {
        return mul(float4(v, 1), GetPrimitiveData(Parameters.PrimitiveId).WorldToLocal).xyz;
    }

    float3 transformLW(FMaterialPixelParameters Parameters, float3 v)
    {
        return TransformLocalVectorToWorld(Parameters, v);
    }

    float3 transformWL(FMaterialPixelParameters Parameters, float3 v)
    {
        return normalize(mul(v, (float3x3)GetPrimitiveData(Parameters.PrimitiveId).WorldToLocal));
    }
};
```

So far so good. The integration is far from over, but the post is already long enough. In the future I plan to write a second part with the rest of the integration and a way to expand the Map function so we can edit shapes and their parameters within the material editor.

The result is so cool! Thanks for sharing.

Thanks! <3

Thanks for the great tutorial! Do you have any ideas on how to get shadows working? It seems to be almost there, but I think the SphereTracing code would need to take into account the light direction instead of the CameraVector. I tried branching the shader, in case the ShadowDepthShader is active like here: https://answers.unrealengine.com/questions/451958/opacity-mask-shadow-with-pixel-depth-problem-1.html

But no luck so far.

Yes, that is indeed the way to go.

The theory is that you trace the shape again and get the pixelDepthOffset and the opacityMask using the light vector instead of the camera vector (for this you wouldn't need many steps, since the shadow doesn't need as much detail as the visible shape).

If I recall correctly, I got it working with a directional light, but had trouble with other lights, since there is a bug in the transform node when it comes to view space transformations. For a single light, you can set a parameter from Blueprint or use the AtmosphericLightVector node (in this case the directional light should be checked as the sun light).

Also, I think there is no need to make the shadow custom node, since it's already included in recent versions of Unreal. It's called Shadow Pass Switch.

I will try to find time to write the second part and hopefully solve the light problems and get the various shapes working together. Thank you for your comment!
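The shadow-pass idea from the reply above can be sketched on the CPU. This C++ version makes several assumptions not in the post: a single directional light, a hypothetical unit-sphere scene, a small fixed step count, and depth measured along the light direction. traceForShadow is a hypothetical helper, not engine code; in the material it would sit behind the Shadow Pass Switch and feed the opacity mask and pixel depth offset.

```cpp
#include <cassert>
#include <cmath>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float3 operator*(float3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float length(float3 v) { return std::sqrt(dot(v, v)); }

struct ShadowSample { float opacityMask; float depthOffset; };

// Same sphere trace as the visible pass, but marching along the light's
// travel direction (normalized) instead of the camera ray.
ShadowSample traceForShadow(float3 cageSurface, float3 lightDir, int steps, float minDistance) {
    float3 p = cageSurface;
    for (int i = 0; i < steps; ++i) {
        float d = length(p) - 1.f;  // unit sphere at the origin
        if (d < minDistance) {
            // For a directional light, depth increases along the light direction.
            return {1.f, dot(p - cageSurface, lightDir)};
        }
        p = p + lightDir * d;
    }
    return {0.f, 0.f};  // no hit: mask this pixel out of the shadow
}
```

A light ray entering the cage at x = -2 hits the sphere 1 unit deeper, while one passing 1.5 units off-axis is masked out.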

Hi, thanks for the tutorial!

How did you achieve the gif in the smooth union section?

You can just select and move the cube to interact with the sphere.

If you mean how to pick two arbitrary shape actors and get them to interact, you will need to feed each position and shape type to the shader. A unified and "simple" way of doing that is what I was looking for at the end of the experiment. But if you just need a bunch of shapes, you can feed them as parameters from BP.

The ones in the gif are done in the shader, just one cube in the middle and six spheres on the sides.
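One hedged way to generalize Map for the question above is to loop over a list of shape parameters and fold them with the smooth union. This CPU-side C++ sketch uses a hypothetical ShapeParams struct and made-up sizes that mimic the gif: one cube in the middle and six spheres on the sides. In a material, the list would have to be fed as parameters (for example from Blueprint), which this sketch does not cover.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal CPU stand-in for HLSL's float3 (illustration only).
struct float3 { float x, y, z; };
float3 operator-(float3 a, float3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float length(float3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

enum class ShapeType { Sphere, Box };
// Hypothetical per-shape parameters; size is the radius or the box half-size.
struct ShapeParams { ShapeType type; float3 position; float size; };

float sdSphere(float3 p, float r) { return length(p) - r; }
float sdBox(float3 p, float3 b) {
    float3 q = {std::fabs(p.x) - b.x, std::fabs(p.y) - b.y, std::fabs(p.z) - b.z};
    float3 outside = {std::max(q.x, 0.f), std::max(q.y, 0.f), std::max(q.z, 0.f)};
    return length(outside) + std::min(std::max(q.x, std::max(q.y, q.z)), 0.f);
}
float opSmoothUnion(float d1, float d2, float k) {
    float h = std::min(std::max(0.5f + 0.5f * (d2 - d1) / k, 0.f), 1.f);
    return d2 + (d1 - d2) * h - k * h * (1.f - h);  // lerp(d2, d1, h) - k*h*(1-h)
}

// Generic Map: evaluate every shape and blend them all with the smooth union.
float mapShapes(float3 p, const std::vector<ShapeParams>& shapes, float k) {
    float d = 1e9f;  // "nothing here" if the list is empty
    for (std::size_t i = 0; i < shapes.size(); ++i) {
        const ShapeParams& s = shapes[i];
        float3 local = p - s.position;
        float di = (s.type == ShapeType::Sphere) ? sdSphere(local, s.size)
                                                 : sdBox(local, {s.size, s.size, s.size});
        d = (i == 0) ? di : opSmoothUnion(d, di, k);
    }
    return d;
}
```

Every added shape costs one more SDF evaluation per march step (and six more per normal), so the cost grows linearly with the list.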